Ls-dyna Job Submission:

- Running Ls-dyna on a single CPU

- Running Ls-dyna on multiple CPUs

Note:

- Results obtained from single-CPU and multiple-CPU runs may differ. For details, please check

- The same problem was tested with different processor counts: it took 7 hr to complete on a single CPU and 3 hr on 8 processors, whereas on 60 processors it took 84 hr (due to the overhead of distributing the job among the processors)
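The timings above are easier to compare as speedup S = T1/Tp (single-CPU time divided by the time on p processors) and parallel efficiency E = S/p. A small sketch using awk from the shell; the function name speedup is only for illustration:

```shell
#!/bin/sh
# Illustrative helper: speedup S = T1/Tp and parallel efficiency E = S/p.
# Arguments: single-CPU time, parallel time (same units), processor count.
speedup() {
    awk -v t1="$1" -v tp="$2" -v p="$3" 'BEGIN {
        s = t1 / tp
        printf "p=%d speedup=%.2f efficiency=%.1f%%\n", p, s, 100 * s / p
    }'
}

speedup 7 3 8     # prints: p=8 speedup=2.33 efficiency=29.2%
speedup 7 84 60   # prints: p=60 speedup=0.08 efficiency=0.1%
```

A speedup below 1, as for the 60-processor run, means that distributing the job cost more time than the extra processors saved, so it can pay to try a few processor counts on a short run before submitting a long job.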

Ls-dyna single cpu job submission:

- Create a directory such as dyna_singlecpu

- Place your files there (the job script and the input file)

- Using the vi editor, write a script file like this:

```shell
#!/bin/sh

# name the job
#PBS -N dyna_singlecpu

# standard output and error files
#PBS -o output_dyna
#PBS -e error_dyna

# ask for 1 node and 1 processor for 50 hours
#PBS -l nodes=1:ppn=1,walltime=50:00:00

# working directory of the job (you can find your current directory with "pwd")
#PBS -d /home/au_user_id/dyna_singlecpu/

# the following 2 lines make sure you are notified by email when the job ends
#PBS -M au_user_id@auburn.edu
#PBS -m e

# print the hostname (to see which compute node runs the job) and the start time
/bin/hostname > output
date >> output

# run single-CPU Ls-dyna on the input deck li.k
/opt/ls-dyna/ls-dyna.singlecpu I=li.k >> output

# print the end time to see how long the job took
date >> output
```

- Make the script executable with "chmod 744 file_name.sh"

- Submit it with "qsub ./file_name.sh"

- Run "showq" to check whether your job is running

- You can end your session with "exit"; your job will keep running

- You can log in at any time to check whether your results have been generated (you will also be notified by email)
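If you prepare several similar single-CPU runs, the job script can also be generated from the shell instead of typed in vi. A minimal sketch: the function name make_single_cpu_job and its argument order are hypothetical, and the PBS directives and paths are copied from the example script above.

```shell
#!/bin/sh
# Hypothetical helper (not part of the cluster setup): writes a single-CPU
# Ls-dyna job script with the same PBS directives as the example above.
# Usage: make_single_cpu_job JOB_NAME WORK_DIR INPUT_DECK EMAIL [WALLTIME]
make_single_cpu_job() {
    name=$1
    workdir=$2
    deck=$3
    email=$4
    walltime=${5:-50:00:00}   # default: 50 hours, as in the example

    script="$workdir/$name.sh"
    # Unquoted EOF: $name, $workdir, $deck, $email and $walltime are expanded now.
    cat > "$script" <<EOF
#!/bin/sh
#PBS -N $name
#PBS -o output_dyna
#PBS -e error_dyna
#PBS -l nodes=1:ppn=1,walltime=$walltime
#PBS -d $workdir
#PBS -M $email
#PBS -m e

/bin/hostname > output
date >> output
/opt/ls-dyna/ls-dyna.singlecpu I=$deck >> output
date >> output
EOF
    chmod 744 "$script"   # make it executable, as in the steps above
    echo "$script"        # print the path of the generated script
}
```

For example, `make_single_cpu_job dyna_singlecpu "$HOME/dyna_singlecpu" li.k au_user_id@auburn.edu` writes `dyna_singlecpu.sh` into that directory; change to it and submit the script with qsub as above.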

Ls-dyna multiple cpu job submission:

- Create a directory such as dyna_multicpu

- Place your files there (the job script and the input file)

- Using the vi editor, write a script file like this:

```shell
#!/bin/sh

# name the job
#PBS -N dyna_multiplecpu

# standard output and error files
#PBS -o output_dyna
#PBS -e error_dyna

# ask for 1 node and 10 processors for 50 hours
#PBS -l nodes=1:ppn=10,walltime=50:00:00

# working directory of the job (you can find your current directory with "pwd")
#PBS -d /home/au_user_id/dyna_multicpu/

# the following 2 lines make sure you are notified by email when the job ends
#PBS -M au_user_id@auburn.edu
#PBS -m e

# put the MPICH2 tools (mpdboot, mpirun, mpdallexit) on the PATH
export PATH=/opt/mpich2-1.2.1p1/bin:$PATH

# print the hostname to see which node performs the computation
/bin/hostname > output

# start timestamp
date >> output

# write the list of assigned nodes to mpd_nodes (used to boot mpd) and count them
sort -u $PBS_NODEFILE > mpd_nodes
nhosts=`cat mpd_nodes | wc -l`

# create the pfile dynamically: no dump files, global directory = submit
# directory, local directory = ./local (created here if it does not exist)
export TMPDIR=`pwd`/local
mkdir -p "$TMPDIR"
echo "general { nodump }" > pfile
echo "dir { global $PBS_O_WORKDIR local $TMPDIR }" >> pfile

# boot mpd on the assigned nodes
mpdboot -n $nhosts -v -f mpd_nodes

# start MPP Ls-dyna on 10 processors (keep -np equal to ppn above)
mpirun -np 10 /opt/ls-dyna/ls-dyna I=li.k p=pfile >> output

# end timestamp
date >> output

# stop mpd
mpdallexit
```

- Make the script executable with "chmod 744 file_name.sh"

- Submit it with "qsub ./file_name.sh"

- Run "showq" to check whether your job is running

- You can end your session with "exit"; your job will keep running

- You can log in at any time to check whether your results have been generated (you will also be notified by email)

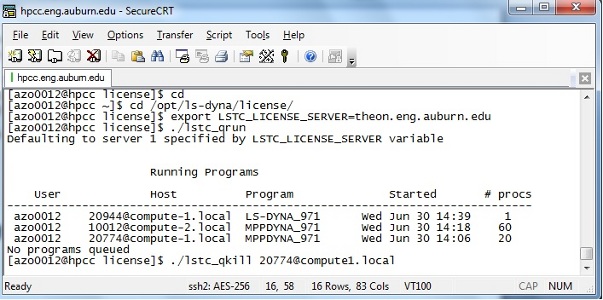
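The pfile part of the multiple-CPU script can be wrapped in a small function, which also makes it easy to use a different pfile name per job when running jobs simultaneously. A sketch under the assumption that the pfile only needs the two sections the script already writes; the function name write_pfile is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: writes an MPP pfile with the same two sections as the
# multiple-CPU script ("general { nodump }" and the global/local "dir" entry)
# and makes sure the local directory exists.
# Usage: write_pfile PFILE_NAME GLOBAL_DIR LOCAL_DIR
write_pfile() {
    pfile=$1
    global_dir=$2
    local_dir=$3
    mkdir -p "$local_dir"
    {
        echo "general { nodump }"
        echo "dir { global $global_dir local $local_dir }"
    } > "$pfile"
}
```

Inside a job script, `write_pfile pfile1 "$PBS_O_WORKDIR" "$(pwd)/local"` followed by `mpirun ... p=pfile1` would replace the two echo lines.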

LSTC maintains a separate queue:

- If for some reason you cancel a job with "canceljob jobid", it will no longer appear in the Torque queue ("showq"), but it keeps running in the LSTC queue

- In that case you have to delete the job manually, as follows:

- cd

- cd /opt/ls-dyna/license

- export LSTC_LICENSE_SERVER=theon.eng.auburn.edu

- You can see which jobs (and how many) you are running with "./lstc_qrun"

- You can kill your job using "./lstc_qkill your_lstc_job_id@assigned_compute_node.local"
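The manual LSTC steps above can be kept in a couple of shell functions. A sketch assuming the license tools stay in /opt/ls-dyna/license and the kill target has the form your_lstc_job_id@assigned_compute_node.local as stated above; the function names and the DRY_RUN switch are hypothetical.

```shell
#!/bin/sh
# Hypothetical wrappers around the manual LSTC steps above.
# Set DRY_RUN=1 to print the kill command instead of running it.
LSTC_DIR=/opt/ls-dyna/license
LSTC_LICENSE_SERVER=theon.eng.auburn.edu   # license server named above
export LSTC_LICENSE_SERVER

# Show your jobs in the LSTC queue.
lstc_list() {
    (cd "$LSTC_DIR" && ./lstc_qrun)
}

# Usage: lstc_kill LSTC_JOB_ID COMPUTE_NODE
lstc_kill() {
    target="$1@$2.local"   # your_lstc_job_id@assigned_compute_node.local
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$LSTC_DIR/lstc_qkill $target"
    else
        (cd "$LSTC_DIR" && ./lstc_qkill "$target")
    fi
}
```

Run `lstc_list` first to find the job ID and the compute node, then pass both to `lstc_kill`.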

Note:

- You can increase the memory in the input file with "*KEYWORD memory=209m"

- You can use "*CONTROL_MPP_DECOMPOSITION_DISTRIBUTE_ALE_ELEMENT" in your input file to distribute the ALE elements evenly among the processors

- You can also set the NUMPROC parameter in the input file or in the pfile

Running two or more Ls-dyna jobs simultaneously:

For single-CPU Ls-dyna, you can submit 2 jobs simultaneously without any change to the script.

For MPP-dyna, you need to change your script as follows:

- Comment out the following 2 lines in your script by putting a # sign in front of them:

`sort -u $PBS_NODEFILE > mpd_nodes`

``nhosts=`cat mpd_nodes | wc -l` ``

- Comment out the line

`mpdboot -n $nhosts -v -f mpd_nodes`

- Comment out the last line

`mpdallexit`

- Using the vi editor, write an mpd_nodes file containing the following 4 lines and save it:

compute-1

compute-2

compute-3

compute-4

- ssh to compute-1:

`ssh compute-1`

- Start mpd manually with the following command:

`/opt/mpich2-1.2.1p1/bin/mpdboot -n 4 -v -f mpd_nodes`

- Type "exit" to log out of compute-1

- Change every occurrence of pfile to pfile1 in the first script and to pfile2 in the second script, so that the two jobs do not clash

- Alternatively, and preferably, keep pfile unchanged and submit the 2 scripts from 2 different directories, so that the files of each job are generated in a separate folder. In that case, change the directory name in each script file:

`#PBS -d /home/au_user_id/dyna_testing/`

- Submit your jobs with "qsub ./script.sh"

- When all of your jobs are done, ssh to compute-1 and run "/opt/mpich2-1.2.1p1/bin/mpdallexit" to stop mpd manually
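The script edits in the steps above can also be scripted. A sketch, assuming the multiple-CPU script still contains the sort, nhosts, mpdboot and mpdallexit lines at the start of a line as shown; the function names make_shared_mpd_script and write_mpd_nodes are hypothetical. Booting and stopping mpd on compute-1 is still done by hand.

```shell
#!/bin/sh
# Hypothetical helper: copies a multiple-CPU job script, comments out the lines
# that boot and stop mpd inside the job, and renames pfile to a per-job name.
# Usage: make_shared_mpd_script ORIGINAL_SCRIPT NEW_SCRIPT NEW_PFILE_NAME
make_shared_mpd_script() {
    src=$1
    dst=$2
    newpfile=$3
    # [^[:alnum:]_] around "pfile" ensures only the whole word is renamed.
    sed -e 's/^sort -u /#&/' \
        -e 's/^nhosts=/#&/' \
        -e 's/^mpdboot /#&/' \
        -e 's/^mpdallexit/#&/' \
        -e "s/\([^[:alnum:]_]\)pfile\([^[:alnum:]_]\)/\1$newpfile\2/g" \
        -e "s/\([^[:alnum:]_]\)pfile\$/\1$newpfile/" \
        "$src" > "$dst"
    chmod 744 "$dst"
}

# Writes the mpd_nodes file with the 4 compute nodes listed above.
write_mpd_nodes() {
    printf '%s\n' compute-1 compute-2 compute-3 compute-4 > "${1:-mpd_nodes}"
}
```

For example, `make_shared_mpd_script file_name.sh script1.sh pfile1` and `make_shared_mpd_script file_name.sh script2.sh pfile2` produce the two scripts to submit.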

Ls-dyna helpful links:

https://wiki.anl.gov/tracc/LS-DYNA

http://www.osc.edu/supercomputing/software/docs/lsdyna/struct.pdf

http://www.osc.edu/supercomputing/software/docs/lsdyna/keyword.pdf

http://www.osc.edu/supercomputing/software/docs/lsdyna/theory.pdf

http://www.osc.edu/supercomputing/software/docs/lsdyna/prepost-tutorial.pdf

http://www.mne.psu.edu/cimbala/Learning/Fluent/fluent_2D_Couette_flow.pdf