Auburn researcher shows how artificial intelligence can be fooled

Published: Apr 18, 2018 7:30 AM

By Chris Anthony

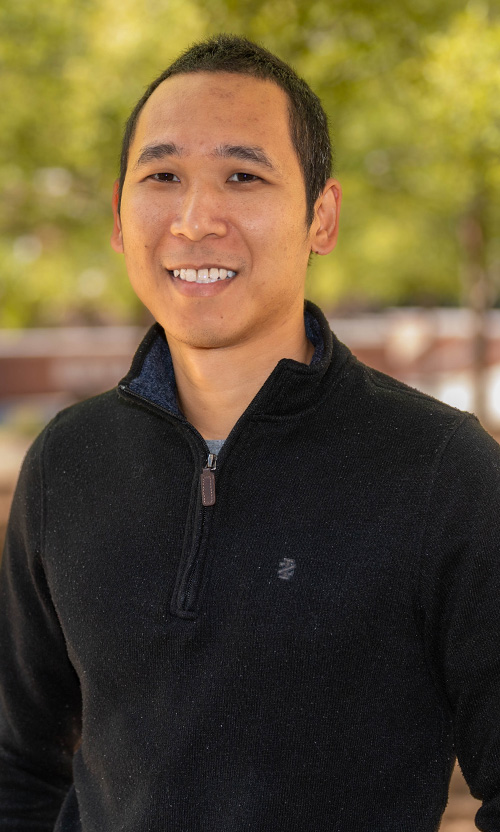

Anh Nguyen, assistant professor of computer science and software engineering, recently spoke with Horizons, a Swiss research magazine, about his research on deep learning, a subset of artificial intelligence.

The story highlights the ways in which experts can fool deep neural networks, the artificial networks made up of layers of virtual neurons. Nguyen and his fellow researchers have created images that are unrecognizable to humans but that deep neural networks identify, with near certainty, as familiar objects.

For instance, an image showing alternating yellow and black horizontal lines can be mistakenly identified as a school bus by deep neural networks.

Recent studies have also found that making a small change to an image – one that is imperceptible to humans – can drastically alter how deep neural networks identify what is depicted in the image.
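Why can a change too small to see flip a network's answer? The sketch below is a toy illustration, not a deep neural network and not Nguyen's method. It assumes a hypothetical linear "classifier" over a flat 1,000-pixel grey image, with made-up weights and a made-up "school bus" label. It nudges every pixel by at most 0.01 in whichever direction lowers the score. This follows the fast gradient sign idea from the adversarial-examples literature, which is simplest for a linear model because the gradient of the score is just the weight vector.

```python
# Toy illustration only: a hypothetical linear "classifier", not a real DNN
# and not the researchers' technique. All weights and labels are made up.

def score(weights, bias, pixels):
    """Linear score; a positive value means the hypothetical label 'school bus'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def sign(v):
    """Return +1, 0 or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def perturb(weights, pixels, eps):
    """Move each pixel by at most eps in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the pixels
    is the weight vector, so stepping against sign(w) is the fast-gradient-sign
    idea in its simplest form. Results are clipped to the valid [0, 1] range.
    """
    return [min(1.0, max(0.0, p - eps * sign(w))) for w, p in zip(weights, pixels)]

n = 1000
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]  # hypothetical weights
bias = 0.5
image = [0.5] * n  # a flat grey "image", pixel values on a 0-to-1 scale

adv = perturb(weights, image, eps=0.01)
max_change = max(abs(a - b) for a, b in zip(adv, image))

print(f"original score:  {score(weights, bias, image):+.2f}")
print(f"perturbed score: {score(weights, bias, adv):+.2f}")
print(f"largest per-pixel change: {max_change:.2f}")
```

No pixel moves by more than 0.01, yet all 1,000 tiny shifts push the score the same way, so it falls by 0.01 × 100 = 1.0 (the sum of the weights' magnitudes is 100), from about +0.5 to about -0.5, and the predicted label flips. Real networks are far more complex, but a similar accumulation of many small, coordinated changes is one commonly cited explanation for such failures.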

This fooling phenomenon raises serious concerns about the security and reliability of real-world machine learning applications, such as self-driving cars.

From the Horizons magazine story:

“Researchers are gradually beginning to understand why these mistakes occur. One reason is that the programs are trained with a limited volume of exemplary data. If they are then confronted with very different cases, things can sometimes go wrong. One further reason for their failure is the fact that DNNs don’t learn the structurally correct rendering of objects. ‘A real image of a four-legged zebra will be classified as a zebra,’ explains Nguyen. ‘But if you add further legs to the zebra in the picture, the DNN tends to be even more certain that it’s a zebra – even if the animal has eight legs.’

The problem is that the DNNs ignore the overall structure of the images. Instead, their process of recognition is based on details of colour and form. At least this was the finding from the initial studies conducted into how DNNs function.

In order to suss out the secrets of neural networks, Nguyen and other researchers are using visualisation techniques. They mark which virtual neurons react to which characteristics of images. One of the results they found was that the first layers of DNNs in general learn the basic characteristics of the training data, as Nguyen explains. In the case of images, that could mean colours and lines, for example. The deeper you penetrate into a neural network, the more combinations occur among the information that has been captured. The second layer already registers contours and shadings, and the deeper layers are then concerned with recognising the actual objects.”

Media Contact: chris.anthony@auburn.edu, 334.844.3447

These images were able to fool deep neural networks into thinking they were something they were not.